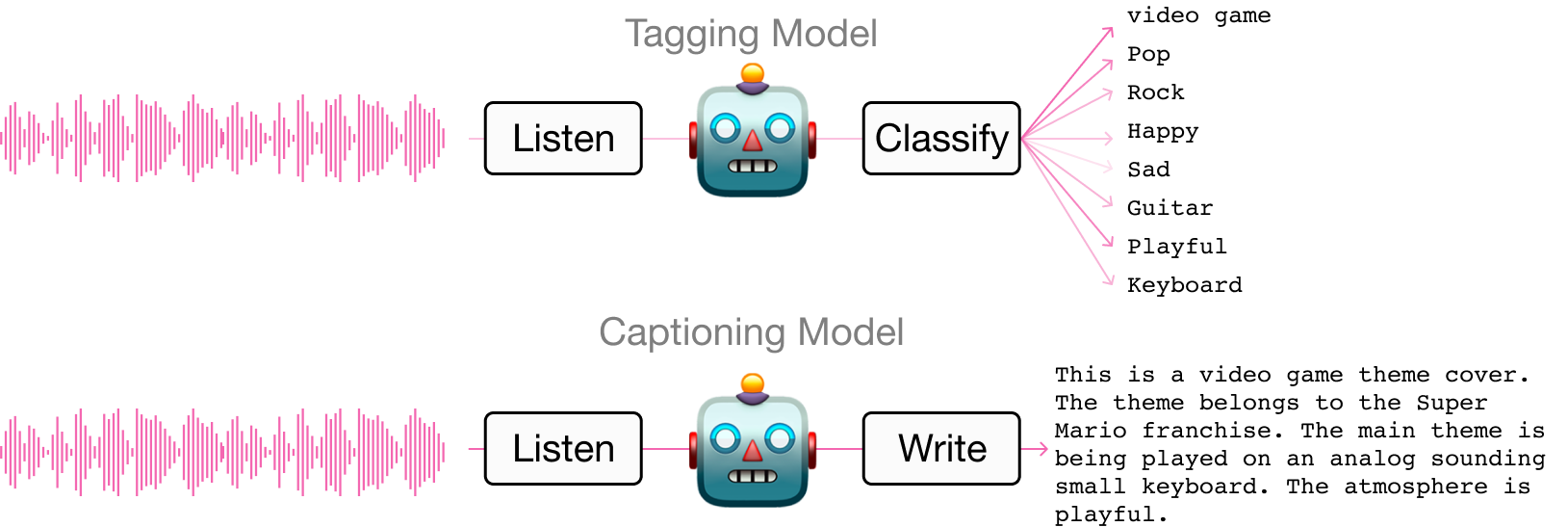

Music Tagging and Captioning

Main Contributors: Seungheon Doh

In music understanding, music tagging and music captioning have become key tasks for improving how people interact with audio content. This research pursues several specific objectives aimed at advancing the capabilities of machine music understanding.

Music Captioning

Music captioning is a promising way to improve interaction between machines and humans around music. The task is to generate a natural-language description of a given piece of music that covers a diverse range of attributes, such as genre, mood, style, theme, audio quality, and sound. The primary objective of this research is to develop a comprehensive language generation framework that goes beyond plain description, supporting related tasks such as playlist title generation and enabling a versatile, human-like interaction model.

Through this work on music captioning, our research seeks to bridge the gap between the technical understanding of music and human expression. By extending language generation to diverse categories and applications, we aim to enable more immersive and personalized interaction between listeners and the vast world of musical content.

Related Publications


The Song Describer Dataset: a Corpus of Audio Captions for Music-and-Language Evaluation

Ilaria Manco, Benno Weck, SeungHeon Doh, Minz Won, Yixiao Zhang, Dmitry Bogdanov, Yusong Wu, Ke Chen, Philip Tovstogan, Emmanouil Benetos, Elio Quinton, György Fazekas, and Juhan Nam

Workshop on Machine Learning for Audio, Neural Information Processing Systems (NeurIPS), 2023 [paper]

LP-MusicCaps: LLM-Based Pseudo Music Captioning

Seungheon Doh, Keunwoo Choi, Jongpil Lee and Juhan Nam

Proceedings of the 24th International Society for Music Information Retrieval Conference (ISMIR), 2023

[paper] [code] [demo] [dataset]

Music Playlist Title Generation Using Artist Information

Haven Kim, Seungheon Doh, Junwon Lee, and Juhan Nam

AAAI-23 Workshop on Creative AI Across Modalities, 2023 [paper]

Music Playlist Title Generation: A Machine-Translation Approach

Seungheon Doh, Junwon Lee, and Juhan Nam

Proceedings of the 2nd Workshop on NLP for Music and Spoken Audio (NLP4MuSA), 2021 [paper] [video]

Music Tagging

Music tagging is a pivotal task in music understanding, bridging auditory expression and textual labels. The overarching goal of this research is to contribute to the development of a generalized music understanding model by establishing robust connections between music and language. Whereas current practice relies on specific datasets or fixed sets of tags, we focus on designing a versatile tagging model that can handle diverse datasets and labels.

1. Large Vocabulary Music Tagging

One key objective of our research is to advance large-vocabulary music tagging. Rather than confining tagging to a predetermined set of tags, we aim to develop a model that can handle a broad and dynamic vocabulary.

2. Zero-shot Music Tagging

Zero-shot tagging means predicting tags that never appeared as labels during training. By building in adaptability and generalization capabilities, the model learns to extend its understanding to unseen genres or novel musical expressions, contributing to a more versatile and adaptable music understanding framework.
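One common way to realize zero-shot tagging is to place audio clips and tag words in a shared embedding space and rank tags by similarity to the audio. The sketch below illustrates only that general joint-embedding idea, not the exact models in the publications listed on this page. It assumes the embeddings have already been produced by some pretrained audio and text encoders; the vectors are hand-made toy values, and `cosine_similarity` and `zero_shot_tag` are hypothetical helper names.

```python
import math
from typing import Dict, List, Sequence, Tuple

# A vector in a (hypothetical) shared audio-text embedding space.
Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Return the cosine similarity between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as unrelated to everything
    return dot / (norm_a * norm_b)


def zero_shot_tag(
    audio_embedding: Vector,
    tag_embeddings: Dict[str, Vector],
    top_k: int = 3,
) -> List[Tuple[str, float]]:
    """Rank candidate tags by cosine similarity to an audio embedding.

    Hypothetical helper: any tag that has an embedding in the shared space
    can be scored, including tags never used as training labels.
    """
    scored = [
        (tag, cosine_similarity(audio_embedding, emb))
        for tag, emb in tag_embeddings.items()
    ]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    # Toy 3-dimensional embeddings; real encoders produce much larger vectors.
    audio = [0.9, 0.1, 0.0]
    tags = {
        "rock": [1.0, 0.0, 0.0],
        "jazz": [0.0, 1.0, 0.0],
        "ambient": [0.0, 0.0, 1.0],
        # A tag added to the vocabulary without retraining anything.
        "guitar-driven": [0.8, 0.2, 0.0],
    }
    for tag, score in zero_shot_tag(audio, tags, top_k=2):
        print(f"{tag}: {score:.3f}")
```

With these toy vectors, "rock" and the newly added "guitar-driven" rank highest. Because the tag vocabulary is just a dictionary of embeddings, extending it to a large or changing set of labels only requires embedding the new words, which is what makes this design attractive for both zero-shot and large-vocabulary tagging.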

3. Generalizable Music Pretrained Model

A cornerstone of our research is the development of a generalizable audio encoder, a fundamental component of music understanding models. Rather than relying on fixed datasets, we seek to train an audio encoder that adapts to diverse musical styles and types of musical information. This entails moving away from rigid models toward ones that are adaptable and scalable enough to accommodate the ever-expanding diversity of music.

Related Publications


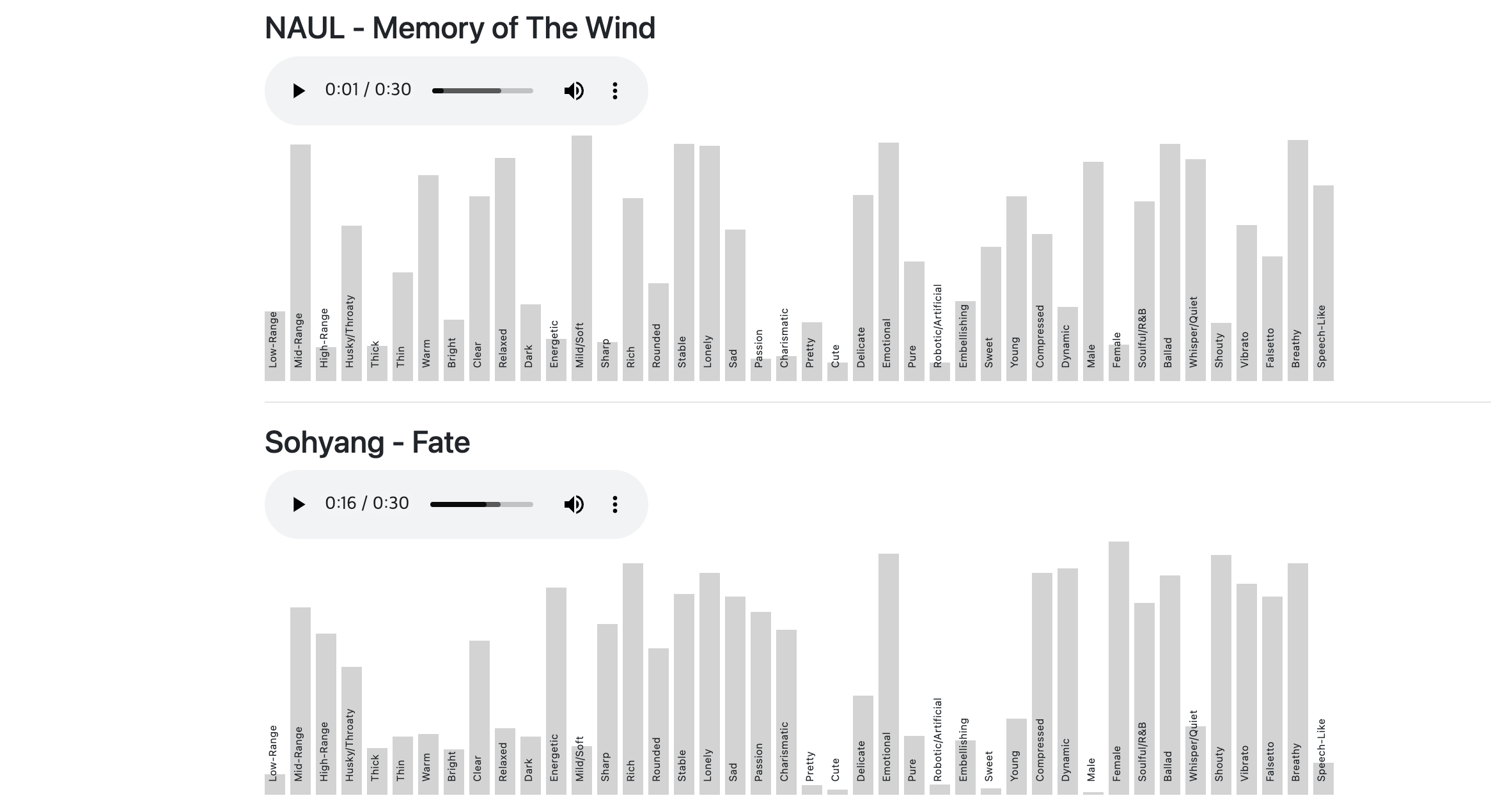

Semantic Tagging of Singing Voices in Popular Music Recordings

Keunhyoung Luke Kim, Jongpil Lee, Sangeun Kum, Chae Lin Park, and Juhan Nam

IEEE/ACM Transactions on Audio, Speech and Language Processing, 2020 [paper] [code]

Zero-shot Learning for Audio-based Music Classification and Tagging

Jeong Choi, Jongpil Lee, Jiyoung Park, and Juhan Nam

Proceedings of the 20th International Society for Music Information Retrieval Conference (ISMIR), 2019 [paper] [code]

Zero-shot Learning and Knowledge Transfer in Music Classification and Tagging

Jeong Choi, Jongpil Lee, Jiyoung Park, and Juhan Nam

Machine Learning for Music Discovery Workshop, the 36th International Conference on Machine Learning (ICML), 2019 [paper]