The Music and Audio Computing Lab (MACLab) is a music research group in the Graduate School of Culture Technology at KAIST. Our mission is to improve the way people enjoy, play, and create music through technology. We are particularly interested in "Music AI" that can understand music, represent its meanings in a human-friendly manner, and generate new musical content to assist human creativity. The following are the main research areas and broad research topics that we are working on:

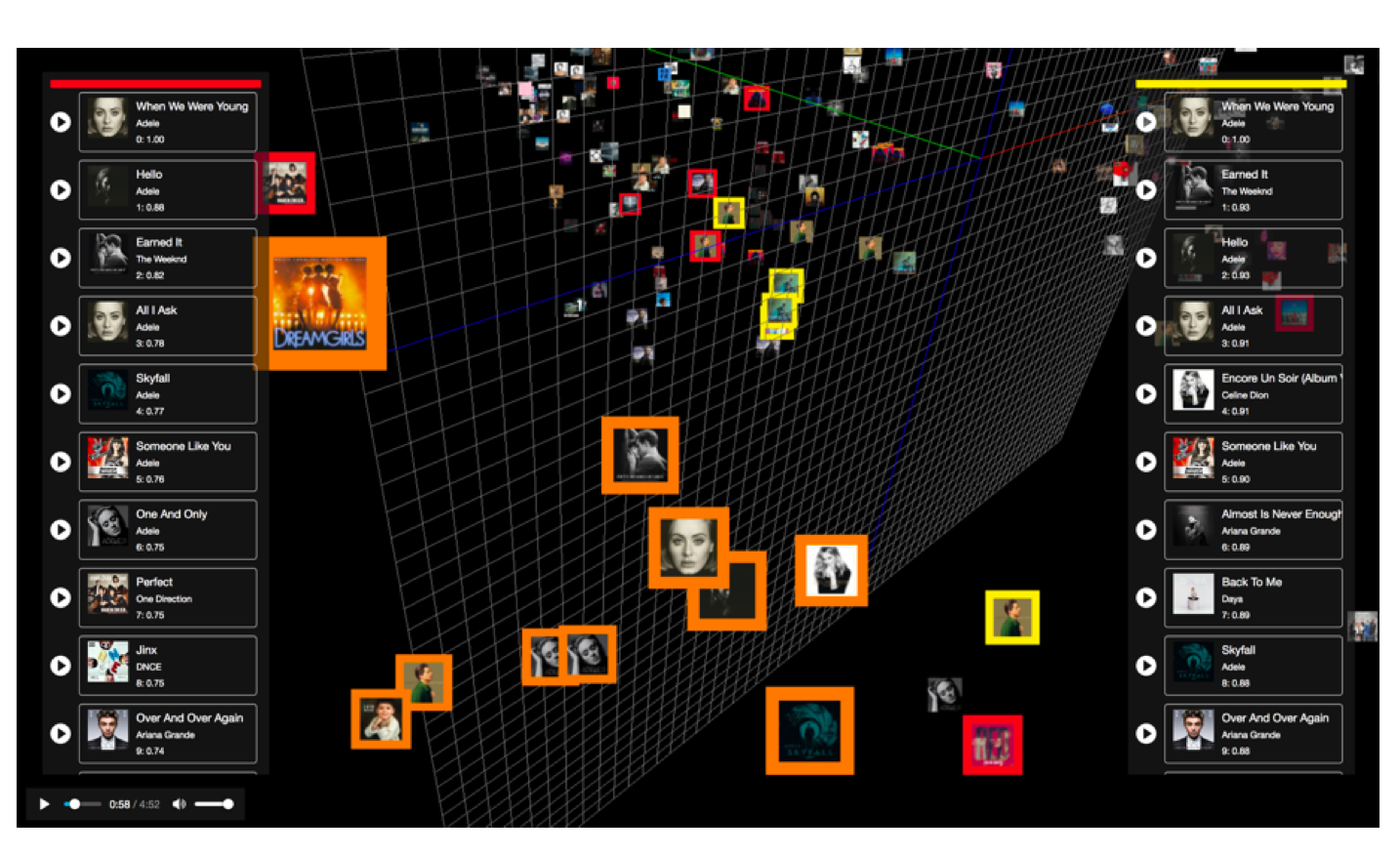

- Music Information Retrieval

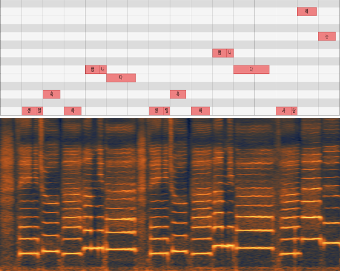

- Audio Signal Processing

- Machine Learning and Deep Learning for Music and Audio

- Human-AI Interaction for Music

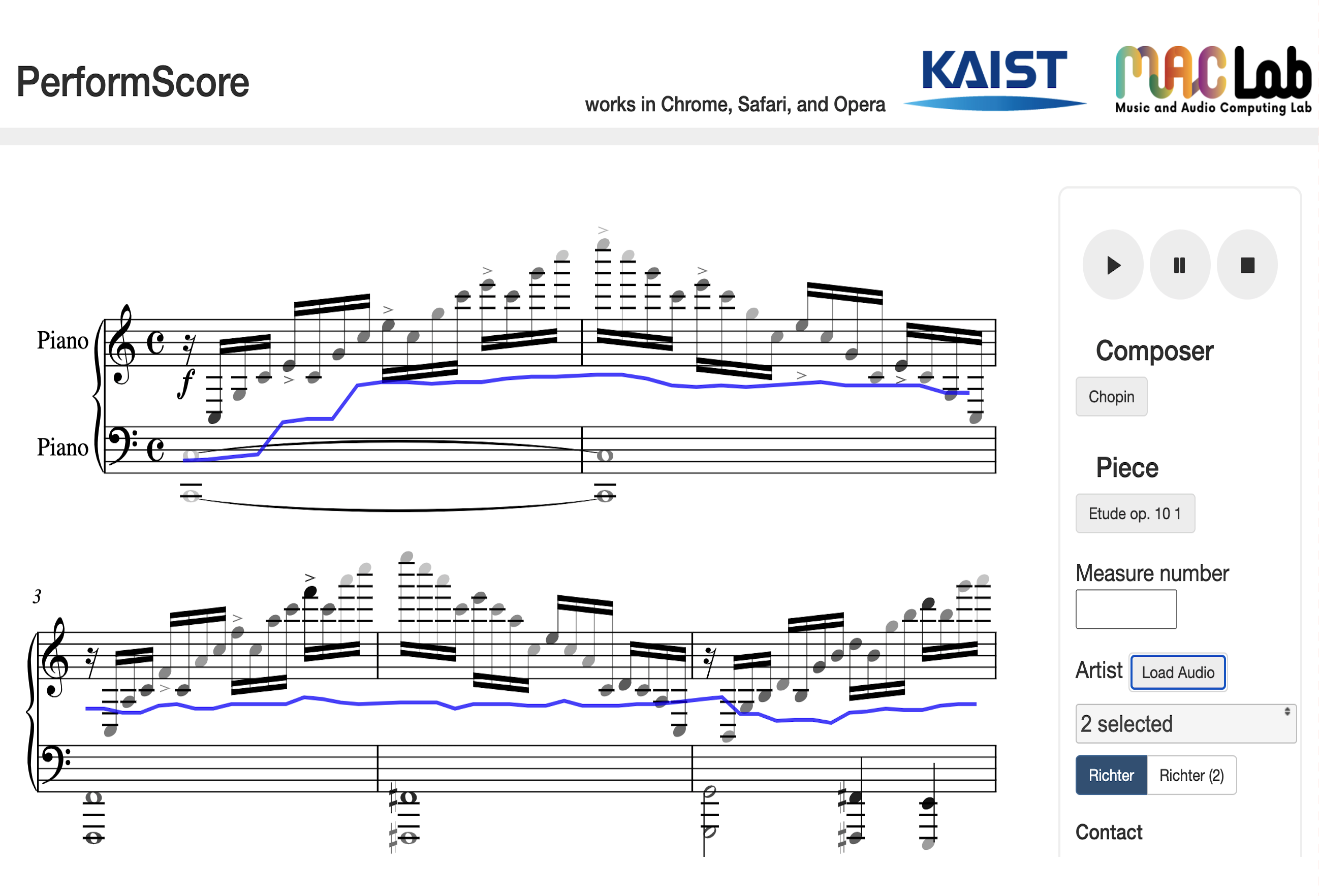

- Computational Analysis and Modeling of Music Performance

- Sound Synthesis and Digital Audio Effects

Research Center and Academic Events

For Prospective Students

If you are interested in applying to KAIST and joining our group, please read this FAQ article for Korean Students or International Students.

News

- [Aug-12-2025] A group of high school students from the APEC Youth STEM Conference KAIST Academic Program visits our lab. [link]

- [Jul-16-2025] Our lab members (including Prof. Nam) perform music at the KAIST Atrium Concert. [link]

- [Jul-07-2025] The outcome of our industry project with Kakao Entertainment is deployed in Melon's new music recommendation service. [link]

- [Jun-30-2025] Prof. Bo Li (Indiana University, Jacobs School of Music) and Prof. Sujin Kim (Carnegie Mellon University, School of Art) visit our lab and present their research and artworks.

- [Jun-23-2025] Prof. Nam is promoted to full professor as of September 2025!

- [Jun-16-2025] Dr. Keunwoo Choi joins the Graduate School of Culture Technology of KAIST as an adjunct professor! [link]

- [Jun-07-2025] 5 papers have been accepted to ISMIR 2025!

- [May-28-2025] A group of students from the CN Yang Scholars Programme (CNYSP) of Nanyang Technological University (NTU), Singapore visit our lab [link]

- [Apr-18-2025] Our AI Piano performance team presents a talk and performance at the World Science Culture Forum 2025. [link]

- [Apr-18-2025] We organize the Korean Society for Music Informatics Inaugural Symposium at KAIST. [link]

- [Apr-15-2025] Sumi Jo Performing Arts Research Center organizes a joint performance, artist talk, and research talk titled "Jazz Composition and Improvisation in Juxtaposition with Large Language Models" by inviting jazz pianist Hayoung Lyou and Dr. Keunwoo Choi. [link]

- [Feb-24-2025] Prof. Nam is appointed as KAIST Endowed Chair Professor for 3 years.

- [Feb-07-2025] Our lab's Music AI research is introduced on KAIST's official YouTube channel. [link]

- [Jan-23-2025] We organize our lab's classical concert!

- [Jan-22-2025] Dr. Yiğit Özer (PhD at Prof. Müller's AudioLab, now research intern at Sony AI) visits our lab and presents his research.

- [Dec-12-2024] Wootaek and Seongheon successfully defended their PhD theses! Congratulations!

- [Sep-28-2024] We participated in "KAISTxDaejeon Arts Center Performance Laboratory, X-Space" and organized the follow-up symposium [link]

- [Sep-07-2024] Our undergrad research student, Gyubin, with Hounsu and Junwon, received the Best Student Award at DAFx 2024! [link]

- [Jul-01-2024] Jiyun begins her summer internship at Yamaha R&D (Hamamatsu, Japan). Congratulations!

- [Jun-05-2024] We showcase our AI performance systems with world-renowned soprano Sumi Jo (조수미) at Innovate Korea 2024 [link].

- [Jun-04-2024] We receive a gift fund from Adobe Research [link].

- [May-27-2024] Seungheon begins his summer internship at Adobe Research (San Francisco, US). Congratulations!

- [May-20-2024] Dr. Minz Won (Research Scientist, Suno) gives a talk about music representation learning in the GCT634 class.

- [Apr-22-2024] Prof. George Fazekas (Queen Mary University of London) and Prof. Vipul Arora (IIT Kanpur) visit our lab.

- [Apr-08-2024] Two PhD students from Prof. Meinard Müller's group (Johannes Zeitler and Simon Schwär) visit our lab.

- [Jan-17-2024] Dr. Seung-Goo Kim (Max Planck Institute for Empirical Aesthetics) visits and gives a talk about Neural Encoding of Musical Emotions (jointly hosted with the Music and Brain Lab).

- [Dec-14-2023] 7 papers have been accepted to ICASSP 2024!

- [Dec-12-2023] Sangeon, Taegyun, and Taejun successfully defended their PhD theses! Congratulations!

- [Dec-01-2023] We organize the International Symposium on AI and Music Performance (ISAIMP 2023) at KAIST [link].

- [Nov-05-2023] Seungheon's ISMIR paper has been nominated for the Best Paper Award at ISMIR 2023! Congratulations!

- [Oct-22-2023] Taejun won the Best Student Paper Award at WASPAA 2023! Congratulations! [link]

- [Oct-10-2023] Our AI piano team participated in the 20th anniversary concert of Daejeon Art Center with flute player Jasmin Choi (최나경) [link].

- [Sep-22-2023] Hayeon and Yonghyun participate in the "Four Seasons 2050" concert project at KAIST [link].

- [Aug-23-2023] Three KAIST labs (MACLab, MubLab, and AirisLab) visit Yamaha HQ to participate in the KAIST-Yamaha joint workshop [link].

- [Aug-21/22-2023] Prof. Nam gives talks at the University of Tsukuba (Prof. Hiroko Terasawa's lab) and AIST (Dr. Masataka Goto's group).

- [Aug-11-2023] We invite Hanoi Hantrakul (ByteDance) to KAIST. He gives a talk titled "Transcultural Machine Learning in Music" [link].

- [Jul-31-2023] Seungheon receives Kim Young Han Global Scholarship [link].

- [Jul-20-2023] We participate in the Music and Audio Workshop 2023 [link].

- [Jun-28-2023] Our AI Piano Team showcased an AI-based performance system with world-renowned soprano Sumi Jo (조수미) [link].

- [Jun-21-2023] Two papers have been accepted to ISMIR 2023!

- [Jun-19-2023] Prof. Nam gives a keynote speech at YAMAHA Global R&D Meetup 2023 [link]

- [May-09-2023] Prof. Nam has been appointed as an associate editor of the IEEE/ACM Transactions on Audio, Speech, and Language Processing [link].

- [May-03-2023] Younghyun and Hyeyoon had a piano recital at the KAIST Auditorium [link].

Address: 291 Daehak-ro, Yuseong-gu, Daejeon (34141)

N25 #3236, KAIST, South Korea