Symbolic Music Generation

Main Contributors: Eunjin Choi and Seolhee Lee

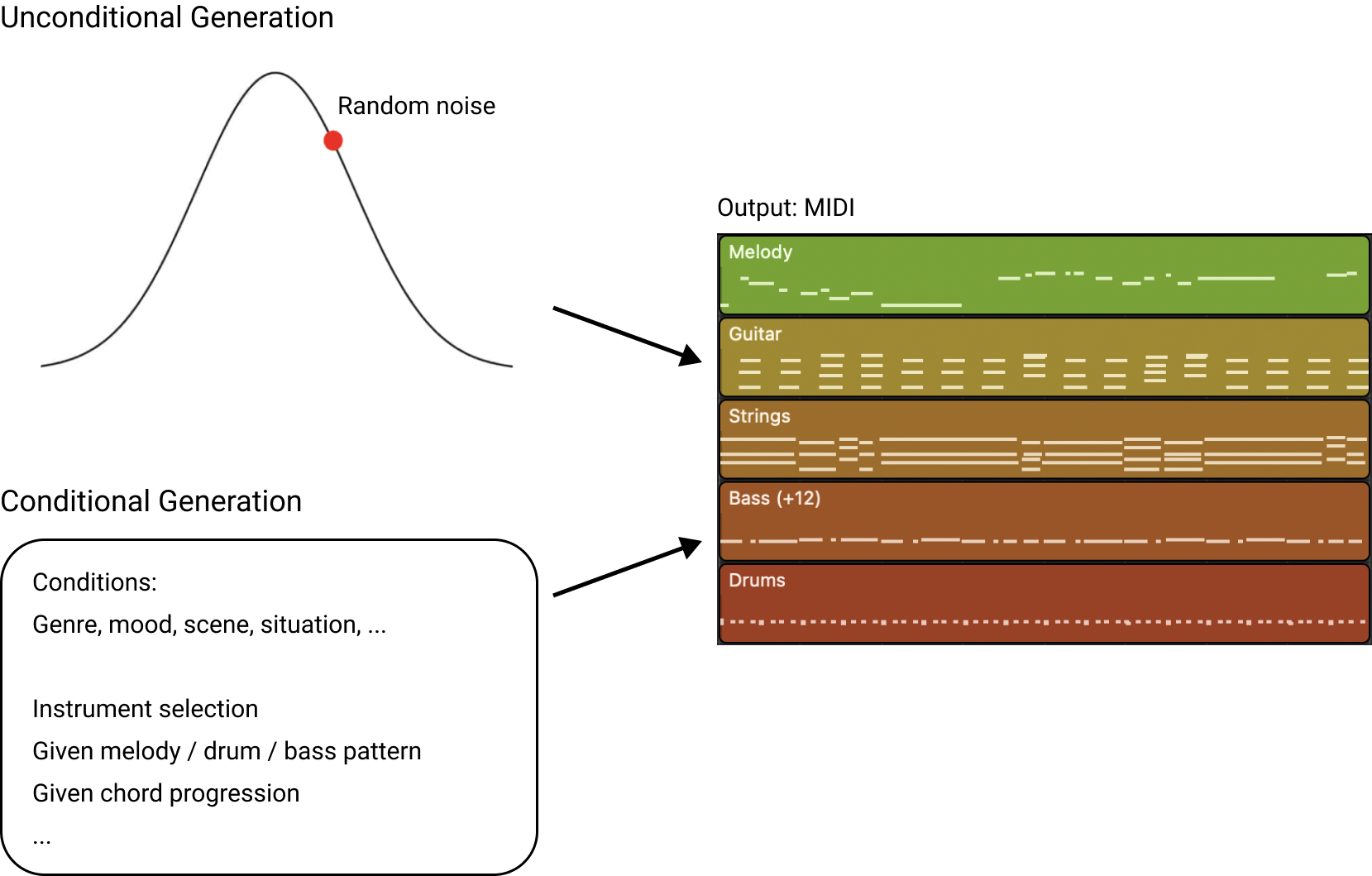

Music generation has attracted researchers' attention for decades. Automatic music composition has a long history, going back to Mozart's musical dice game in the 18th century, and for most of that history it was studied through rule-based approaches. With the recent revival of deep learning, however, data-driven approaches have become the main line of research. The process of music generation can be divided into three parts: score generation, performance generation, and sound generation. Symbolic music generation addresses the first of these: it aims to generate a score, a notation-based representation of music.

Symbolic Music Generation

80s Game Background Music

Background music plays a large part in shaping the overall atmosphere of a game and has a strong impact on the player's experience. As the number of maps and situations grows and characters become more diverse, a game needs more background music, so there is demand for ways to create game music more easily and with more variety. Game music also tends to have a relatively clear compositional intent, because each piece is composed for a specific map, character, or situation. This makes it well suited to conditional music generation. We are currently working on generating multi-instrumental 80s game music, which has the advantage that it can be converted into a sheet music dataset while preserving the original composer's intention.

Piano Music Generation

Piano music is polyphonic: several notes are played simultaneously. It usually consists of a melody, a harmonic accompaniment, and ornaments that embellish the principal notes. We focus on music generation research that can control each of these parts. Approaches to controllable generation include accompaniment or chord progression generation for a given melody, style transfer, and accompaniment arrangement. Such studies require a dataset with annotations rich enough to distinguish each part, so we are also adding annotations to EMOPIA, a pop piano music dataset.
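As a rough illustration of what a part-level annotation could look like, the sketch below stores each note with a label and filters notes by that label. The `Note` class, its fields, the label names, and `select_part` are all hypothetical and chosen for this example; this is not the EMOPIA annotation format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Note:
    pitch: int        # MIDI pitch number (60 = middle C)
    onset: float      # start time in beats
    duration: float   # length in beats
    part: str         # hypothetical label: "melody", "accompaniment", or "ornament"


def select_part(notes, part):
    """Return the notes carrying the given part label, ordered by onset then pitch."""
    return sorted(
        (n for n in notes if n.part == part),
        key=lambda n: (n.onset, n.pitch),
    )


# A made-up one-bar fragment, used only to exercise the function.
notes = [
    Note(48, 0.0, 2.0, "accompaniment"),
    Note(55, 0.0, 2.0, "accompaniment"),
    Note(76, 1.0, 1.0, "melody"),
    Note(74, 0.75, 0.25, "ornament"),
    Note(72, 0.0, 1.0, "melody"),
]

melody = select_part(notes, "melody")
print([n.pitch for n in melody])
```

With labels like these, a model could be conditioned on one part (for example the melody) and asked to generate another (for example the accompaniment).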

Related Publications

- Teaching Chorale Generation Model to Avoid Parallel Motions
  Eunjin Choi, Hyerin Kim, Juhan Nam, and Dasaem Jeong
  Proceedings of the 16th International Symposium on Computer Music Multidisciplinary Research (CMMR), 2023 [paper]

- Bridging Audio and Symbolic Piano Data through a Web-Based Annotation Interface
  Seolhee Lee, Eunjin Choi, Joonhyung Bae, Hyerin Kim, Eita Nakamura, Dasaem Jeong, and Juhan Nam
  Late Breaking Demo in the 24th International Society for Music Information Retrieval Conference (ISMIR), 2023 [paper] [video]

- YM2413-MDB: A Multi-Instrumental FM Video Game Music Dataset with Emotion Annotations
  Eunjin Choi, Yoonjin Chung, Seolhee Lee, Jong Ik Jeon, Taegyun Kwon, and Juhan Nam
  Proceedings of the 23rd International Society for Music Information Retrieval Conference (ISMIR), 2022 [paper] [website]

- EMOPIA: A Multi-Modal Pop Piano Dataset For Emotion Recognition and Emotion-based Music Generation
  Hsiao-Tzu Hung, Joann Ching, Seungheon Doh, Nabin Kim, Juhan Nam, and Yi-Hsuan Yang
  Proceedings of the 22nd International Society for Music Information Retrieval Conference (ISMIR), 2021 [paper] [dataset]