Singing Voice Tagging and Embedding Learning

Main Contributors: Keunhyoung Kim and Kyungyun Lee

The singing voice has been the primary musical instrument throughout human history. As its expressive and perceptual capabilities have developed, the voice's ability to convey complex and subtle emotions has made it the most important element in many genres of music, especially popular music. Traditional studies of the high-level qualities of the singing voice in popular music have struggled to model its complex timbre space, because of the multifaceted nature of the music and the lack of voice-specific data. Recent advances in deep learning and the availability of large-scale datasets now make it possible to quantify the timbre space of the singing voice more accurately. This research topic builds on these advances to analyze the singing voice, with a focus on popular music.

Audio Embedding Learning for Singing Voices

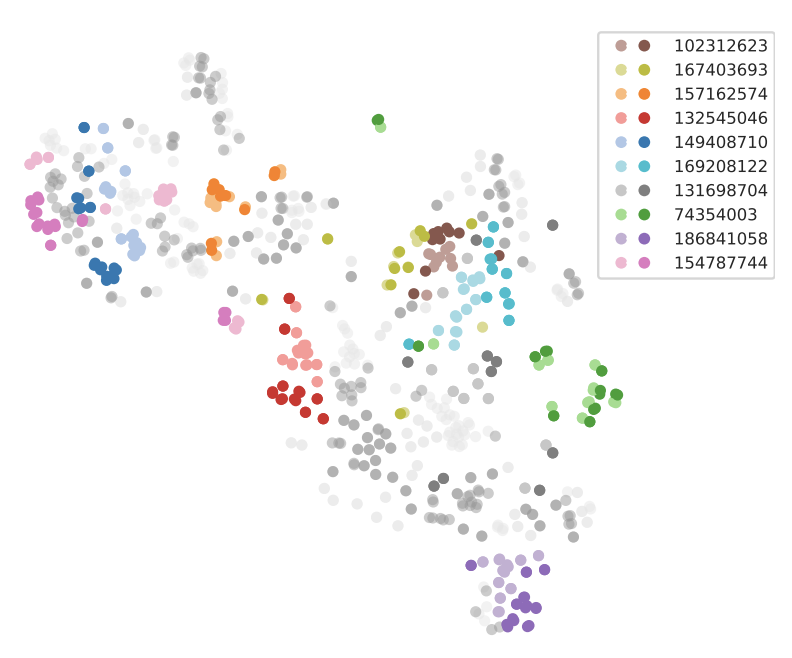

Vocals are the central part of a song in popular music, so music composition and production are closely tied to the quality of the singing voice. This topic uses deep neural networks to learn a cross-domain audio embedding space shared by vocal and mixed audio, and applies it to vocal-oriented music information retrieval tasks such as vocal retrieval, vocal tagging, and artist classification.

Singer identification in the mono-to-mix cross-domain embedding space. The labels are singer IDs. The colors in the left column represent monophonic vocal tracks, and those in the right column represent mixed tracks.
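The idea of a shared vocal/mix embedding space can be made concrete with a minimal Python sketch: a plain cross-domain triplet margin loss, plus vocal-to-mix retrieval by cosine similarity. It assumes that embeddings have already been produced by some encoder (here they are just lists of floats) and that a triplet consists of a monophonic vocal (anchor), a mix of the same track or singer (positive), and an unrelated mix (negative). The margin value and all function names are hypothetical. This is a generic triplet loss, not the structure-preserving triplet loss from the publications below, which adds terms that are not reproduced here.

```python
import math
from typing import List, Sequence

Vector = List[float]


def l2_normalize(v: Sequence[float]) -> Vector:
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def cross_domain_triplet_loss(anchor_vocal: Sequence[float],
                              positive_mix: Sequence[float],
                              negative_mix: Sequence[float],
                              margin: float = 0.2) -> float:
    """Hinge loss that pulls a vocal embedding toward a matching mix embedding
    and pushes it away from a non-matching one by at least `margin`.
    The margin of 0.2 is a hypothetical choice for illustration."""
    a = l2_normalize(anchor_vocal)
    p = l2_normalize(positive_mix)
    n = l2_normalize(negative_mix)
    return max(0.0, sq_dist(a, p) - sq_dist(a, n) + margin)


def retrieve(query_vocal: Sequence[float],
             mix_bank: Sequence[Sequence[float]],
             k: int = 1) -> List[int]:
    """Return indices of the k mix embeddings most similar (cosine) to a vocal query."""
    q = l2_normalize(query_vocal)
    scored = []
    for idx, emb in enumerate(mix_bank):
        e = l2_normalize(emb)
        scored.append((sum(x * y for x, y in zip(q, e)), idx))
    scored.sort(reverse=True)  # highest cosine similarity first
    return [idx for _, idx in scored[:k]]
```

Normalizing both domains to unit length keeps the loss on a comparable scale across vocal and mixed inputs. For unit vectors the squared Euclidean distance equals 2 minus twice the cosine similarity, so ranking by cosine similarity at retrieval time agrees with the distance the loss optimizes.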

Related Publications

- Learning a Cross-Domain Embedding Space of Vocal and Mixed Audio with a Structure-Preserving Triplet Loss

Keunhyoung Kim, Jongpil Lee, Sangeun Kum, and Juhan Nam

Proceedings of the 22nd International Society for Music Information Retrieval Conference (ISMIR), 2021 [paper] [website]

- Learning a Joint Embedding Space of Monophonic and Mixed Music Signals for Singing Voice

Kyungyun Lee and Juhan Nam

Proceedings of the 20th International Society for Music Information Retrieval Conference (ISMIR), 2019 [paper] [website] [code]

- A Hybrid of Deep Audio Feature and i-vector for Artist Recognition

Jiyoung Park, Donghyun Kim, Jongpil Lee, Sangeun Kum, and Juhan Nam

Joint Workshop on Machine Learning for Music, International Conference on Machine Learning (ICML), 2018 [paper]

- Revisiting Singing Voice Detection: a Quantitative Review and the Future Outlook

Kyungyun Lee, Keunwoo Choi, and Juhan Nam

Proceedings of the 19th International Society for Music Information Retrieval Conference (ISMIR), 2018 [paper] [code]

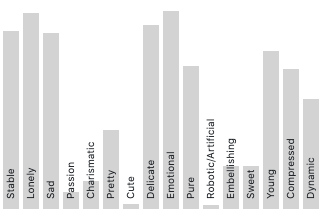

Semantic Tagging of Pop Music Vocals

This topic treats the qualitative analysis of the singing voice as a music auto-tagging task, in which songs are annotated with a set of tag words. We defined a vocabulary describing timbre and singing styles, focusing on K-pop vocalists, collected human annotations for individual tracks, and conducted statistical analyses to understand the global and temporal characteristics of the tag words. We also built a music tagging model that automatically predicts these voice-specific tags from popular music recordings.

Pop Music Vocal Tags
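The auto-tagging formulation described above can be sketched as a multi-label classifier: each tag gets an independent sigmoid output trained with binary cross-entropy, and simple tag statistics can be computed directly from the human annotations. The Python sketch below assumes that a model has already produced one logit per tag (per segment or per track). The tag names, the 0.5 threshold, the mean pooling over segments, and all function names are hypothetical and do not describe the published model or dataset.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence

# Hypothetical tag vocabulary for illustration only; not the project's actual tag set.
TAGS = ["breathy", "husky", "clear", "vibrato"]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def multilabel_bce(logits: Sequence[float], targets: Sequence[int]) -> float:
    """Mean binary cross-entropy over tags, computed from raw logits.

    Uses the stable form max(z, 0) - z*y + log(1 + exp(-|z|)), treating
    each tag as an independent yes/no decision.
    """
    total = 0.0
    for z, y in zip(logits, targets):
        total += max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))
    return total / len(logits)


def track_level_probs(segment_logits: Sequence[Sequence[float]]) -> List[float]:
    """Average per-segment tag probabilities into one track-level vector.
    Mean pooling over segments is an assumption made for this sketch."""
    n = len(segment_logits)
    return [sum(sigmoid(seg[i]) for seg in segment_logits) / n
            for i in range(len(TAGS))]


def predict_tags(logits: Sequence[float], threshold: float = 0.5) -> List[str]:
    """Return every tag whose predicted probability reaches the threshold."""
    return [tag for tag, z in zip(TAGS, logits) if sigmoid(z) >= threshold]


def tag_frequencies(annotations: List[List[str]]) -> Dict[str, float]:
    """Fraction of annotated tracks carrying each tag
    (a tag repeated within one track is counted once)."""
    counts = Counter(tag for track in annotations for tag in set(track))
    return {tag: counts[tag] / len(annotations) for tag in TAGS}
```

Using per-tag sigmoid outputs with binary cross-entropy, rather than a single softmax, lets one recording carry several vocal tags at once, which matches the multi-label nature of auto-tagging.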

Related Publications

- Semantic Tagging of Singing Voices in Popular Music Recordings

Keunhyoung Luke Kim, Jongpil Lee, Sangeun Kum, Chae Lin Park, and Juhan Nam

IEEE/ACM Transactions on Audio, Speech and Language Processing, 2020 [paper] [website/dataset]

- Building K-POP Singing Voice Tag Dataset: A Progress Report

Keunhyoung Luke Kim, Sangeun Kum, Chae Lin Park, Jongpil Lee, Jiyoung Park, and Juhan Nam

Late Breaking Demo at the 18th International Society for Music Information Retrieval Conference (ISMIR), 2017 [paper]