Musical Cue Detection

Main Contributors: Jaeran Choi, Taegyun Kwon

In ensemble performances, visual cues such as gestures are vital for synchronization, especially at starts or at fermatas, where the tempo changes rapidly. A challenge arises when an AI pianist joins the ensemble: without a human pianist to read them, traditional cues become ineffective.

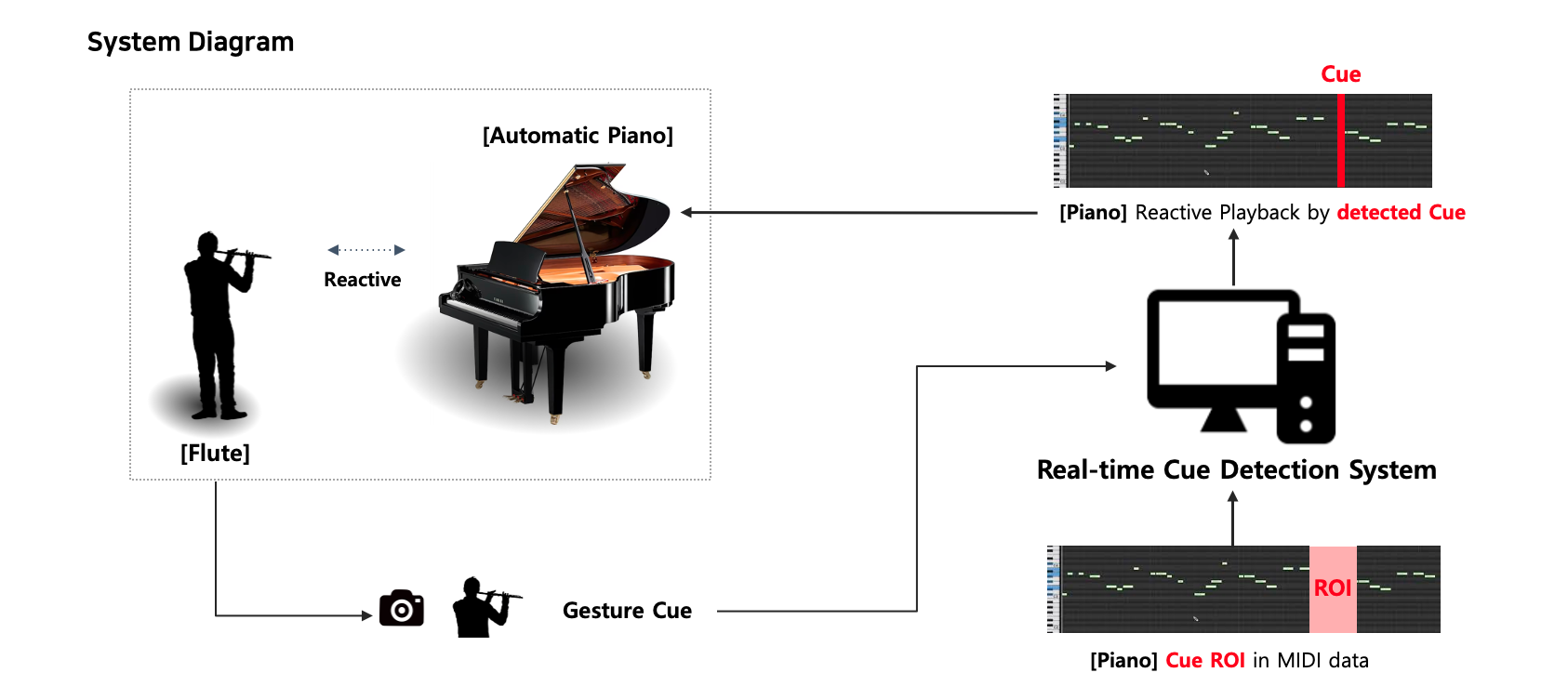

This research introduces a real-time gesture cue detection system that overcomes this limitation. The system uses video to capture the performer's gestures as they happen, and a detected cue initiates the reactive piano accompaniment.

Musical Cue Dataset

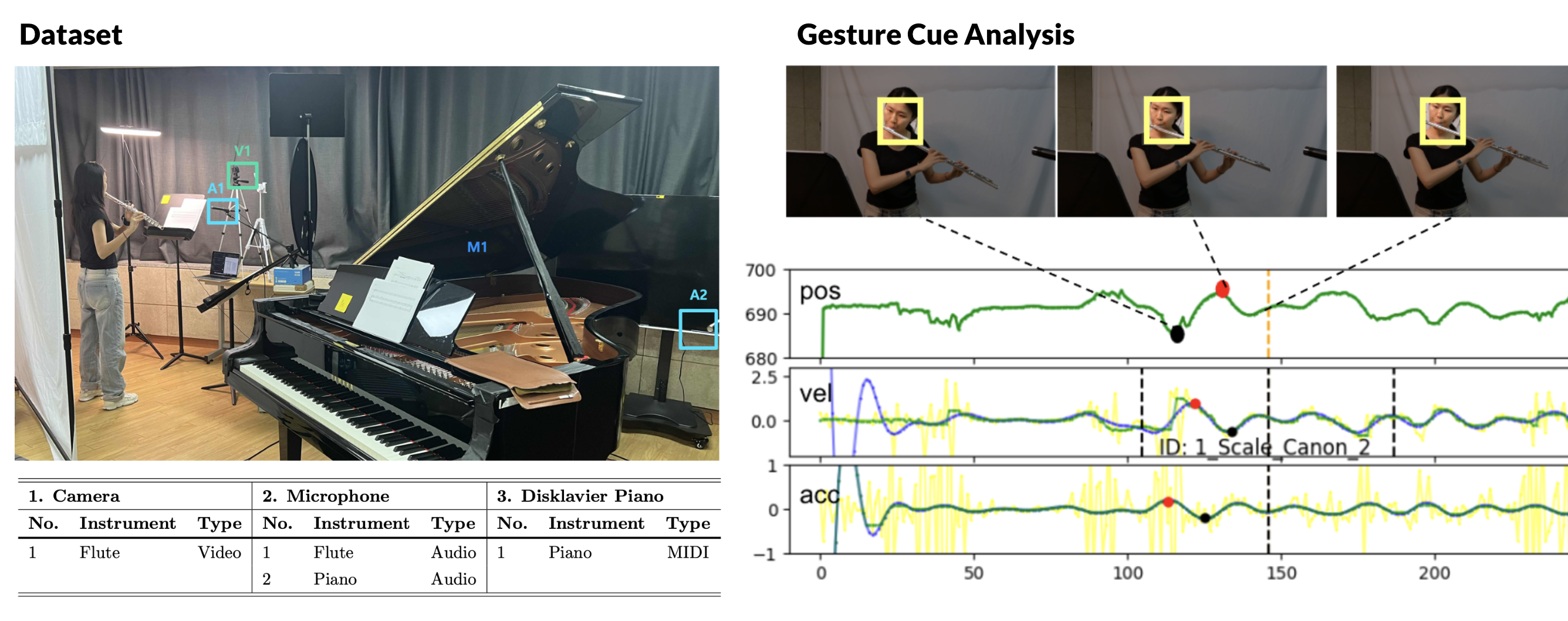

To analyze the gesture cues of flutists, we created a dataset of flute-piano duet performances that includes video, audio, and MIDI data.

Gesture Cue Analysis

We developed an algorithm to identify gesture cues by analyzing the flutist's movements in the flute-piano duet dataset, utilizing optical flow and face landmark detection to extract motion features. We tested the algorithm with dataset videos to understand the correlation between gesture cues and flute onset timing. Based on the results of the analysis, we set the optimal accompaniment start timing according to the gesture cue duration and applied it to the reactive accompaniment system.
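The analysis step can be illustrated with a small sketch. It is not the authors' implementation: it assumes the vertical position of one face landmark has already been extracted per frame (by any landmark detector), and it assumes the relation between cue duration and onset delay is modeled as a straight line through the origin. The function names `vertical_velocity` and `fit_cue_onset_ratio` are hypothetical.

```python
from typing import List, Sequence


def vertical_velocity(y_positions: Sequence[float], fps: float) -> List[float]:
    """Frame-to-frame velocity (position units per second) of a tracked face point.

    Assumption: y_positions holds one landmark coordinate per video frame.
    """
    return [(b - a) * fps for a, b in zip(y_positions, y_positions[1:])]


def fit_cue_onset_ratio(cue_durations: Sequence[float],
                        onset_delays: Sequence[float]) -> float:
    """Least-squares slope through the origin: delay ~= ratio * duration.

    Assumption: a single linear ratio summarizes the dataset; the source
    does not state how the ratio was actually estimated.
    """
    num = sum(d * o for d, o in zip(cue_durations, onset_delays))
    den = sum(d * d for d in cue_durations)
    if den == 0:
        raise ValueError("need at least one non-zero cue duration")
    return num / den
```

Given cue durations and the measured delays to the flute onset from annotated videos, `fit_cue_onset_ratio` returns one number that a real-time system could reuse to schedule the accompaniment.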

Real-time Gesture Cue Detection System

In this study, we created a system that detects gesture cues in real time for duets between a flutist and a computer-controlled piano. Using a camera, the system captures the flutist's gestures and triggers playback of the piano accompaniment MIDI files at the right moment. Gesture cue detection is based on the flutist's facial motion velocity curve: the system identifies a gesture pattern between the curve's maximum and minimum peaks. The piano's onset time is then set using a cue-onset ratio obtained from the gesture analysis.
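The detection logic described above might be sketched as follows. This is a simplified illustration under stated assumptions, not the system's actual code: it assumes the maximum peak precedes the minimum peak, that both must exceed a hypothetical amplitude threshold `min_amplitude`, and that the accompaniment onset is placed after the minimum peak by the cue duration scaled with the cue-onset ratio. The names `find_cue` and `accompaniment_onset` are hypothetical.

```python
from typing import List, Optional, Tuple


def find_cue(velocity: List[float], fps: float,
             min_amplitude: float = 1.0) -> Optional[Tuple[float, float]]:
    """Return (start, end) in seconds of the first max-peak -> min-peak pattern.

    velocity: signed facial motion velocity, one value per frame.
    min_amplitude: hypothetical threshold both peaks must exceed in magnitude.
    """
    peak_idx = None
    for i in range(1, len(velocity) - 1):
        prev, cur, nxt = velocity[i - 1], velocity[i], velocity[i + 1]
        if peak_idx is None:
            # Look for a strong local maximum that opens the gesture.
            if cur >= min_amplitude and cur > prev and cur >= nxt:
                peak_idx = i
        elif cur <= -min_amplitude and cur < prev and cur <= nxt:
            # A strong local minimum after the maximum closes the gesture.
            return peak_idx / fps, i / fps
    return None


def accompaniment_onset(cue: Tuple[float, float], cue_onset_ratio: float) -> float:
    """Assumed rule: onset = cue end + ratio * cue duration."""
    start, end = cue
    return end + cue_onset_ratio * (end - start)


if __name__ == "__main__":
    curve = [0.0, 0.5, 2.0, 0.5, -0.5, -2.0, -0.5, 0.0]
    cue = find_cue(curve, fps=10.0)
    if cue is not None:
        print("cue:", cue, "piano onset:", accompaniment_onset(cue, 1.0))
```

In a live setting the same check would run on a sliding window of recent frames, and the returned onset time would be used to schedule MIDI playback.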

Performances

To test the system in a concert setting, we developed an interactive ensemble system that enables interaction between an AI Pianist and a Human Flutist. Jaeran Choi and Taegyun Kwon developed the real-time gesture cue detection system. Junhyung Bae created the visualization of the cue detection status, and Jiyun Park and Yonghyun Kim developed a system that synchronizes visuals with the cue system in real time. For the AI Pianist part, the piano transcription model by Taegyun Kwon was used.

- 20th Anniversary Concert of the Daejeon Arts Center with Flutist Jasmine Choi

  Jaeran Choi, Taegyun Kwon, Junhyung Bae, Jiyun Park, Yonghyun Kim and Juhan Nam

- International Symposium on AI and Music Performance (ISAIMP) 2023 Lecture Concert

  Jaeran Choi, Taegyun Kwon, Junhyung Bae, Jiyun Park, Yonghyun Kim and Juhan Nam

Related Publications

- Real-time Flutist Gesture Cue Detection System for Auto-Accompaniment

Jaeran Choi, Taegyun Kwon, Joonhyung Bae, Jiyun Park, Yonghyun Kim, Juhan Nam

Late Breaking Demo in the 25th International Society for Music Information Retrieval Conference (ISMIR), 2024 [paper]