Deep Audio Embedding Learning for Music

Main Contributors: Jongpil Lee and Jiyoung Park

Deep representation learning offers a powerful paradigm for mapping input data onto an organized embedding space and is useful for many music information retrieval tasks. Two central approaches to representation learning are deep metric learning and classification; both aim to learn an embedding space that generalizes well across tasks. This research topic explores deep audio embedding learning for music, with a particular focus on content-based music retrieval.
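To make the contrast between the two approaches concrete, here is a minimal sketch of the kind of objective each one typically optimizes. It is an illustration only, not the training code from the publications listed on this page: the triplet loss, the softmax cross-entropy, the margin value, and the function names are all assumptions chosen for the example.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Metric-learning style objective (assumed form): the positive should be
    closer to the anchor than the negative by at least `margin`."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


def cross_entropy(logits, label):
    """Classification style objective: negative log-probability of the true
    class under a softmax over the logits (computed in a stable way)."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


# A well-ordered triplet incurs no loss; a badly ordered one is penalized.
good = triplet_loss([0.0, 0.0], [0.1, 0.0], [1.0, 0.0])
bad = triplet_loss([0.0, 0.0], [1.0, 0.0], [0.1, 0.0])

# Uniform logits over two classes give a loss of log(2).
uniform = cross_entropy([0.0, 0.0], 0)
```

In both cases the embedding is the output of the network layer that feeds the loss; the two objectives differ in whether supervision arrives as relative comparisons between items or as class labels.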

Disentangled Audio Embedding Space for Music

Disentangled Representation Learning

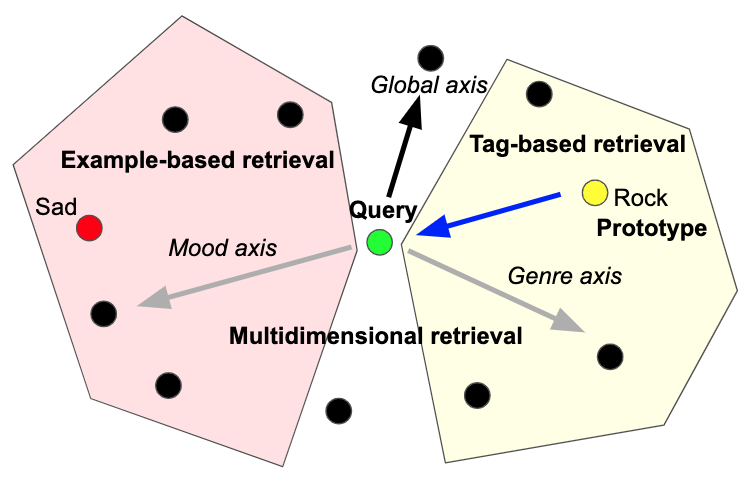

Disentangled representation learning allows multiple semantic concepts in music (e.g., genre, mood, instrumentation) to be learned jointly while remaining separable in the learned embedding space. This enables conditional similarity search restricted to a particular concept, which can be applied to a variety of music search scenarios.
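As a rough illustration of conditional similarity search, the sketch below ranks tracks by distance within a single concept. It assumes, purely for the example, that each concept occupies a contiguous slice of a 6-dimensional embedding; the `SUBSPACES` layout, the track ids, and the embedding values are hypothetical and are not taken from the publications below.

```python
import math

# Hypothetical layout: each concept owns a contiguous slice of the embedding.
SUBSPACES = {
    "genre": slice(0, 2),
    "mood": slice(2, 4),
    "instrumentation": slice(4, 6),
}


def conditional_distance(a, b, concept):
    """Euclidean distance computed only over the dimensions of one concept."""
    s = SUBSPACES[concept]
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a[s], b[s])))


def search(query, library, concept):
    """Return track ids sorted from most to least similar within `concept`."""
    return sorted(
        library,
        key=lambda track_id: conditional_distance(query, library[track_id], concept),
    )


# Toy embeddings (made-up values): track_a matches the query in genre,
# track_b matches it in mood.
library = {
    "track_a": [0.9, 0.1, 0.0, 1.0, 0.5, 0.5],
    "track_b": [0.0, 1.0, 0.8, 0.2, 0.5, 0.5],
}
query = [1.0, 0.0, 0.9, 0.1, 0.5, 0.5]

genre_ranking = search(query, library, "genre")
mood_ranking = search(query, library, "mood")
```

The same query returns a different ranking depending on the concept selected, which is the behavior that a disentangled space is meant to support.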

Related Publications

- Metric Learning VS Classification for Disentangled Music Representation Learning

  Jongpil Lee, Nicholas J. Bryan, Justin Salamon, Zeyu Jin, and Juhan Nam

  Proceedings of the 21st International Society for Music Information Retrieval Conference (ISMIR), 2020 [paper]

- Disentangled Multidimensional Metric Learning for Music Similarity

  Jongpil Lee, Nicholas J. Bryan, Justin Salamon, Zeyu Jin, and Juhan Nam

  Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2020 [paper] [dataset]

Representation Learning Using Metadata

Supervised music representation learning has mainly relied on semantic labels such as music genres. However, annotating music with semantic labels is time-consuming and costly. In this work, we investigate the use of metadata such as artist, album, and track information, which is readily available for each music track. We show that these freely available labels are an effective means of music representation learning.
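One simple way to turn such metadata into supervision is to treat each distinct artist, album, or track as a class. The sketch below shows that idea under stated assumptions: the record fields, the helper names `build_label_maps` and `encode`, and the example tracks are hypothetical, and the actual label construction in the publications below may differ.

```python
def build_label_maps(tracks, fields=("artist", "album", "track")):
    """Assign an integer class id to every distinct value of each metadata
    field, in order of first appearance."""
    maps = {field: {} for field in fields}
    for record in tracks:
        for field in fields:
            maps[field].setdefault(record[field], len(maps[field]))
    return maps


def encode(record, maps):
    """Convert one metadata record into integer training labels."""
    return {field: mapping[record[field]] for field, mapping in maps.items()}


# Made-up metadata records; no manual annotation is involved.
tracks = [
    {"artist": "Artist X", "album": "Album 1", "track": "Song A"},
    {"artist": "Artist X", "album": "Album 1", "track": "Song B"},
    {"artist": "Artist Y", "album": "Album 2", "track": "Song C"},
]

maps = build_label_maps(tracks)
labels = [encode(record, maps) for record in tracks]
```

Here the first two tracks share artist and album labels but receive distinct track labels, so the three label types describe similarity at different levels of granularity.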

Related Publications

- Representation Learning of Music Using Artist, Album, and Track information

  Jongpil Lee, Jiyoung Park, and Juhan Nam

  Machine Learning for Music Discovery Workshop, the 36th International Conference on Machine Learning (ICML), 2019 [paper]

- Representation Learning of Music Using Artist Labels

  Jiyoung Park, Jongpil Lee, Jangyeon Park, Jung-Woo Ha, and Juhan Nam

  Proceedings of the 19th International Society for Music Information Retrieval Conference (ISMIR), 2018 [paper] [code]