CNN Architectures for Music Classification

Main Contributors: Jongpil Lee and Taejun Kim

Music classification is typically handled as an image classification task that takes spectrograms as image input and predicts class labels. This research topic focuses on adapting convolutional neural networks (CNNs), the primary architecture in image classification, to the music domain by taking the distinct characteristics of music audio into account.

SampleCNN

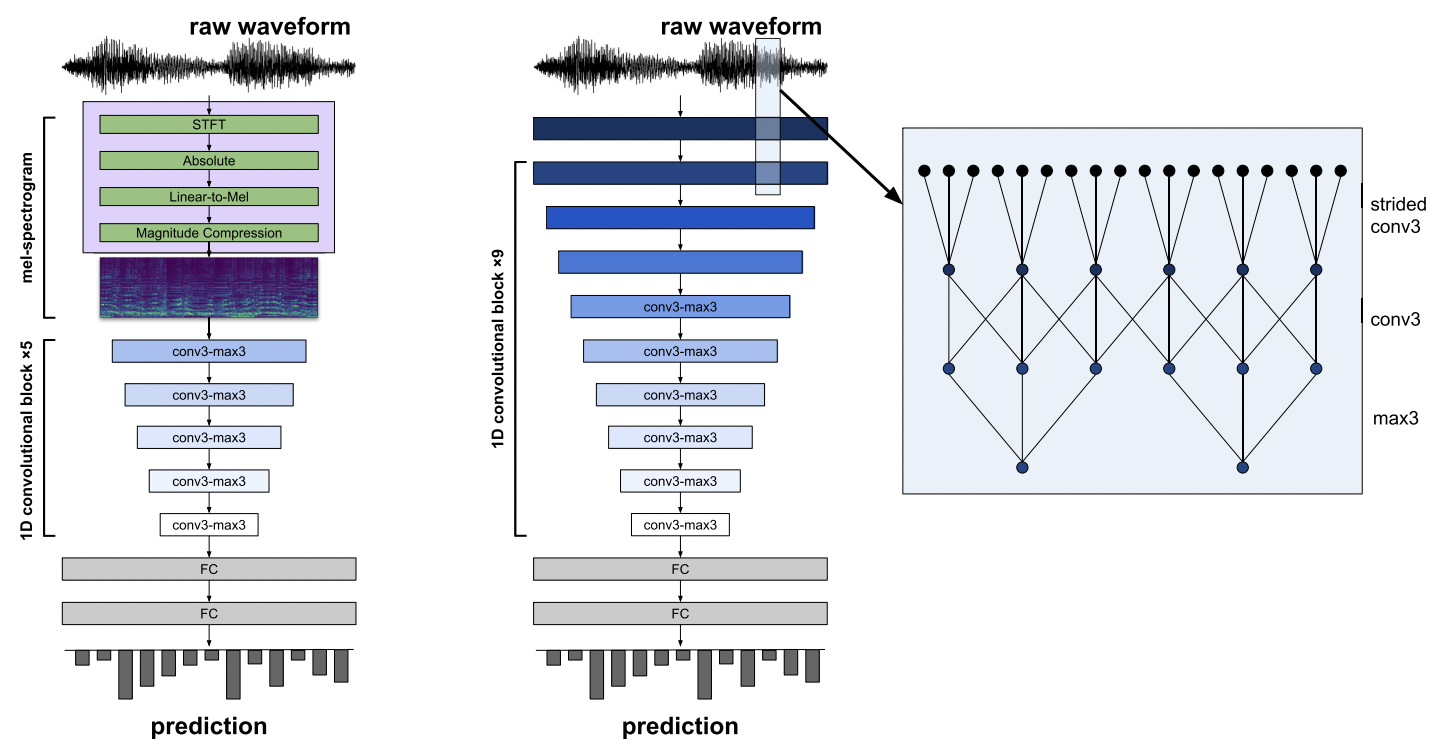

SampleCNN is a CNN architecture that takes raw waveforms directly as input and uses very small filters. Music classification models typically use mel-spectrograms as input, which are frame-level, hand-engineered features. We tried to break this convention with a sample-level CNN. The model configuration is similar to VGGNet, which takes raw pixel images and uses 3x3 filters. The difference is that SampleCNN uses 3x1 filters because it takes 1D waveforms. The effectiveness of SampleCNN was verified in an industry-scale music tagging experiment. In addition, we extended SampleCNN to more general audio classification tasks such as speech command recognition and sound event detection.
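To make the sample-level idea concrete, here is a minimal, single-channel sketch in pure Python of the building blocks described above: a first layer that applies a 3-tap filter with stride 3 to raw samples, followed by blocks of 3-tap convolution, ReLU, and max-pooling by 3. It is not the authors' implementation. The real model uses many channels per layer and additional components, and the function names, the random kernels, and the 59049-sample (3^10) input with 9 blocks are assumptions chosen for illustration. The point is the arithmetic: because every stage shrinks the sequence by a factor of 3, 3^10 input samples collapse to a single output frame.

```python
import random

def strided_conv(x, kernel):
    """First layer (illustrative): a 3-tap filter applied with stride 3,
    so each output summarizes 3 consecutive raw samples without overlap."""
    k = len(kernel)
    return [sum(w * x[i + j] for j, w in enumerate(kernel))
            for i in range(0, len(x) - k + 1, k)]

def conv1d_same(x, kernel):
    """3-tap cross-correlation with one zero on each side, so the output
    length equals the input length (assumes len(kernel) == 3)."""
    padded = [0.0] + list(x) + [0.0]
    return [sum(w * padded[i + j] for j, w in enumerate(kernel))
            for i in range(len(x))]

def relu(x):
    return [max(0.0, v) for v in x]

def max_pool(x, size=3):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(x[i:i + size]) for i in range(0, len(x) - size + 1, size)]

def sample_cnn_forward(waveform, kernels):
    """kernels[0] is the strided first layer; each remaining kernel defines
    one block of conv(3) -> ReLU -> max-pool(3). Single channel only."""
    h = relu(strided_conv(waveform, kernels[0]))
    for kernel in kernels[1:]:
        h = max_pool(relu(conv1d_same(h, kernel)))
    return h

# Assumed setup: 3**10 = 59049 input samples, one strided layer, then 9 blocks.
# Lengths: 59049 -> 19683 -> 6561 -> ... -> 3 -> 1.
rng = random.Random(0)
kernels = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(10)]
waveform = [rng.uniform(-1.0, 1.0) for _ in range(3 ** 10)]
print(len(sample_cnn_forward(waveform, kernels)))  # prints 1
```

At an assumed sample rate of 22,050 Hz, 59049 samples correspond to roughly 2.7 seconds of audio, so the single output frame summarizes that whole segment. Keeping every filter at size 3 and letting depth, rather than filter length, grow the receptive field mirrors the choice VGGNet makes with 3x3 filters on images.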

The SampleCNN Model

Related Publications


Comparison and Analysis of SampleCNN Architectures for Audio Classification

Taejun Kim, Jongpil Lee, and Juhan Nam

IEEE Journal of Selected Topics in Signal Processing, 2019 [paper] [code]

Sample-level CNN Architectures for Music Auto-tagging Using Raw Waveforms

Taejun Kim, Jongpil Lee, and Juhan Nam

Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2018 [paper] [code]

SampleCNN: End-to-End Deep Convolutional Neural Networks Using Very Small Filters for Music Classification

Jongpil Lee, Jiyoung Park, Keunhyoung Luke Kim, and Juhan Nam

Applied Sciences, 2018 [paper]

Raw Waveform-based Audio Classification Using Sample-level CNN Architectures

Jongpil Lee, Taejun Kim, Jiyoung Park, and Juhan Nam

Machine Learning for Audio Signal Processing Workshop, Neural Information Processing Systems (NIPS), 2017 [paper]

Combining Multi-Scale Features Using Sample-level Deep Convolutional Neural Networks for Weakly Supervised Sound Event Detection

Jongpil Lee, Jiyoung Park, Sangeun Kum, Youngho Jeong, and Juhan Nam

Proceedings of the 2nd Workshop on Detection and Classification of Acoustic Scenes and Events (DCASE), 2017 [paper]

Sample-level Deep Convolutional Neural Networks for Music Auto-Tagging Using Raw Waveforms

Jongpil Lee, Jiyoung Park, Keunhyoung Luke Kim, and Juhan Nam

Proceedings of the 14th Sound and Music Computing Conference (SMC), 2017 [paper]

Multi-level and Multi-Scale Models

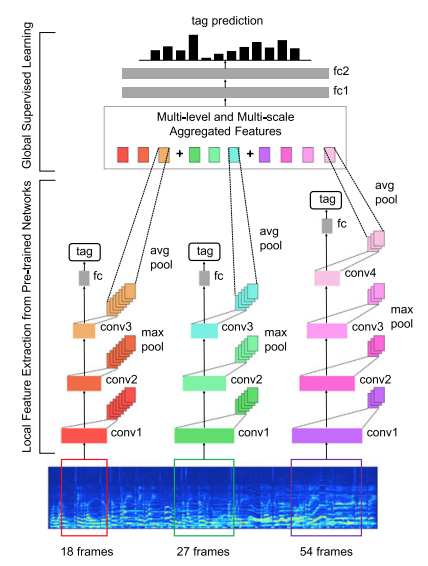

Music auto-tagging is a multi-label classification task that annotates music with semantically diverse labels. As a result, the labels span different levels of abstraction and require different scopes of input context. In addition, the spectrogram of a music track is much longer than a typical image input. Considering these differences, we proposed a CNN architecture that captures hierarchical features from different input sizes and aggregates them over time.
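A minimal sketch of the aggregation step, in pure Python and under simplifying assumptions: features have already been extracted from several layers (levels) of CNNs that take segments of different lengths (scales); each feature sequence is averaged over time and all results are concatenated into one track-level vector. The dictionary layout, the function names, the segment lengths used as keys, and the choice of average pooling are hypothetical illustrations, not details taken from the publications below.

```python
def time_average(segment_features):
    """Average equal-length per-segment feature vectors into one vector."""
    n = len(segment_features)
    return [sum(dim) / n for dim in zip(*segment_features)]

def aggregate_track(features):
    """features[scale][level] -> list of per-segment vectors for one track.
    Returns one track-level vector: the time-averaged features of every
    (scale, level) pair, concatenated in a fixed (sorted) order."""
    track_vector = []
    for scale in sorted(features):
        for level in sorted(features[scale]):
            track_vector.extend(time_average(features[scale][level]))
    return track_vector

# Toy example: two hypothetical input scales (segment lengths in samples),
# with features from two layers at the short scale and one at the long scale.
features = {
    729: {1: [[1.0, 2.0], [3.0, 4.0]],
          2: [[0.0, 0.0, 6.0], [2.0, 2.0, 0.0]]},
    59049: {1: [[5.0, 5.0]]},
}
print(aggregate_track(features))  # [2.0, 3.0, 1.0, 1.0, 3.0, 5.0, 5.0]
```

In a full model this track-level vector would then feed a classifier that predicts the tags. Concatenating in a fixed order keeps each dimension tied to the same scale and layer across tracks.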

The Multi-Level and Multi-Scale Feature Aggregation CNN Model

Related Publications


Cross-cultural Transfer Learning Using Sample-level Deep Convolutional Neural Networks

Jongpil Lee, Jiyoung Park, Chanju Kim, Adrian Kim, Jangyeon Park, Jung-Woo Ha, and Juhan Nam

Music Information Retrieval Evaluation eXchange (MIREX) at the 18th International Society for Music Information Retrieval Conference (ISMIR), 2017 [paper]

*** 1st place in the four K-POP tasks among all algorithms submitted so far ***

Multi-Level and Multi-Scale Feature Aggregation Using Sample-level Deep Convolutional Neural Networks for Music Classification

Jongpil Lee and Juhan Nam

Machine Learning for Music Discovery Workshop, the 34th International Conference on Machine Learning (ICML), 2017 [paper]

Multi-Level and Multi-Scale Feature Aggregation Using Pre-trained Convolutional Neural Networks for Music Auto-Tagging

Jongpil Lee and Juhan Nam

IEEE Signal Processing Letters, 2017 [paper]