Expressive Sound Synthesis

Main Contributors: Hounsu Kim

Expressiveness is a fundamental element of music and gives the audience a rich listening experience. Modeling it is challenging because of its intricacy and its diversity across real-world music data. This line of research models musical expressiveness for a variety of instruments by leveraging recent deep generative models.

Expressive Acoustic Guitar Sound Synthesis

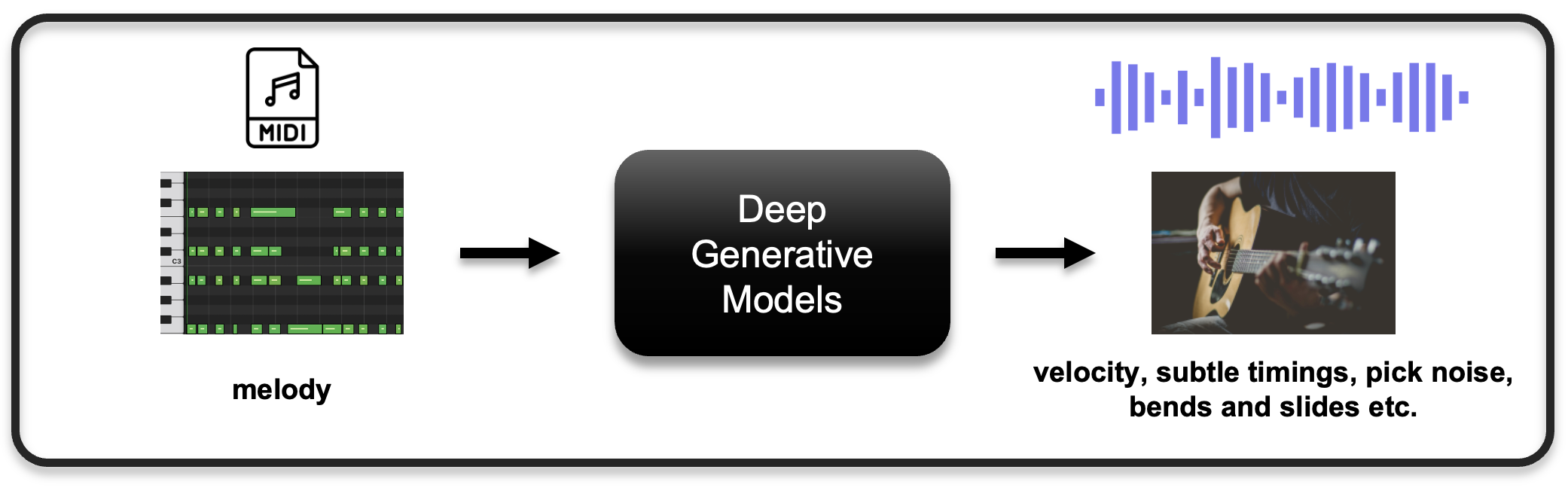

Modern music production relies extensively on Digital Audio Workstations (DAWs), in which MIDI (Musical Instrument Digital Interface) serves as the primary format for manipulating music.

In this setting, rendering MIDI into realistic, expressive audio is a vital yet challenging task, commonly referred to as MIDI-to-audio synthesis.

Current off-the-shelf methods rely heavily on sample-based synthesis, which builds the output from prerecorded, high-quality audio samples.

However, sample-based synthesis typically lacks the flexibility needed to faithfully capture the wide range of acoustic nuances inherent in expressive musical instruments.
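To make this limitation concrete, the following is a minimal sketch of a generic sampler, not the method used in this work. It assumes a hypothetical sample bank holding one "recording" every four semitones (decaying sines stand in for real audio), and the names `SAMPLE_BANK` and `render_note` are illustrative only. A note is rendered by resampling the nearest stored sample to the target pitch and scaling it by MIDI velocity, so the only expressive controls are pitch and loudness.

```python
import math

SAMPLE_RATE = 16000  # assumed sample rate for this toy example


def midi_to_hz(pitch):
    """Convert a MIDI pitch number to frequency in Hz (A4 = 69 = 440 Hz)."""
    return 440.0 * 2 ** ((pitch - 69) / 12)


def make_fake_sample(freq_hz, duration_s=0.5, sr=SAMPLE_RATE):
    """Hypothetical stand-in for a prerecorded note: an exponentially decaying sine."""
    n = int(duration_s * sr)
    return [math.sin(2 * math.pi * freq_hz * t / sr) * math.exp(-5 * t / sr)
            for t in range(n)]


# Hypothetical sample bank: one stored note every 4 semitones, MIDI 40..88.
SAMPLE_BANK = {p: make_fake_sample(midi_to_hz(p)) for p in range(40, 89, 4)}


def render_note(pitch, velocity):
    """Render a MIDI note by pitch-shifting the nearest stored sample and scaling by velocity."""
    root = min(SAMPLE_BANK, key=lambda p: abs(p - pitch))
    sample = SAMPLE_BANK[root]
    ratio = 2 ** ((pitch - root) / 12)  # playback-speed ratio that shifts the pitch
    out = []
    pos = 0.0
    while pos < len(sample) - 1:
        i = int(pos)
        frac = pos - i
        # Linear interpolation between neighbouring stored samples.
        out.append(sample[i] * (1 - frac) + sample[i + 1] * frac)
        pos += ratio
    gain = velocity / 127  # velocity only changes the level, not the timbre
    return [gain * x for x in out]
```

In this sketch, calling `render_note(64, 100)` twice returns identical waveforms, and `render_note(64, 50)` differs from it only by a constant gain factor. Commercial sample libraries soften this with velocity layers and round-robin samples, but the set of available variations is still fixed at recording time.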

This work focuses on the acoustic guitar, an instrument known for subtle yet clear differences between recordings of real performances and audio rendered solely from MIDI.

Related Publications